In the last installment of this blog series on being successful with digital analytics, I discussed why your team should strive to be a profit center rather than a cost center within the organization. The profit center vs. cost center concept will be a recurring theme in this series, but in this post, I’d like to talk about the infamous Solution Design Reference (SDR). Although many people love their digital analytics SDR, I am going to share why I have found this document problematic and how you can mitigate its shortcomings.

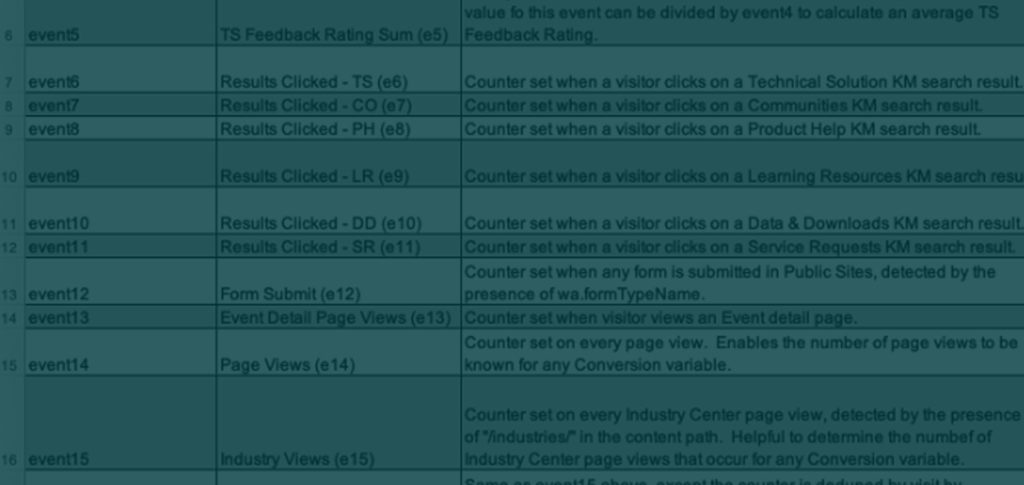

If you are reading this, the odds are that you know what a digital analytics SDR is, but in case you don’t: it is a document (usually a spreadsheet) that lists all of the data points you are tracking, or intend to track, in your digital analytics implementation. Some people also call it a “tagging document.” If you are an Adobe Analytics customer, it lists the Success Events, eVars and sProps in your implementation. If you use Google Analytics, it contains things like Goals and Dimensions. My contempt for this document is not about the document itself; there is no reason you should not document which data points you collect and in which variables. My issues with the SDR are that most organizations:

- Don’t keep it updated

- Use it in place of identifying actual business requirements

On the first point, most organizations create their SDR when they first implement and then forget about it. Websites change all the time, so the data you collect about your website (or mobile app) must change too. When I was in my “Wolf” role at Omniture, I would estimate that more than 90% of the time I began working with a troubled client, their SDR was years old. Often, developers had “gone rogue” and started allocating variables left and right that no one knew about. This got even worse when organizations had multiple websites/apps with a different SDR for each, which meant they could not easily merge their data and see rollup totals. Since the SDR is a document wholly separate from the digital analytics tool, it is easy to see how its information could diverge from what is actually in the tool.

However, my true disdain for the SDR comes from the fact that it only tells you WHAT data is in your implementation, not WHY it is there. For example, if your SDR lists that you are storing “Internal Search Keywords” in eVar5, that is good to know, but it doesn’t tell you or your stakeholders why you care about internal search keywords. Are keywords being tracked to see which ones are the most or least popular? Are they being tracked to see which keywords produced zero search results? Are they tracked to see which keywords eventually lead to orders and revenue? Are they tracked to see the page from which each keyword was searched, in order to identify opportunities to improve page-specific content? Or is it all of the above? How are your analytics stakeholders and users supposed to know what to do with “Internal Search Keywords” data if none of this is defined?

I have found that SDRs tend to give analytics teams permission to be lazy about their implementation. They can claim their implementation is documented by pointing to the SDR and tagging specifications, but they haven’t taken the extra step of digging into WHY the data is being collected. When I begin working with clients in an analytics consulting capacity, the first thing I request is any documentation they have on their implementation. Ninety-nine percent of the time, what I get is an outdated SDR and a file containing the old code from the initial implementation (years ago!). Be honest: would you have anything better to give me?

Action Items

Here are some homework assignments that will help when we get to the next post:

- Update your SDR. Compile an up-to-date list of all of your data points and make sure it is 100% in sync with what is configured in your digital analytics tool. If you are an Adobe Analytics user, you can download the latest variable information using this site: https://reportsuites.com/

- While doing this, make sure that your implementation is identical across all of your different report suites/profiles. It is not advisable for the same variable slot to hold different data points in different data sets. If one data set requires more variables than another, simply ignore or hide the unneeded variables in the more basic data set, but keep labeling the variables the same way everywhere. That will prevent conflicts the next time someone needs a new variable.
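If you want to automate these two homework items, a small script can do the comparison for you. The Python sketch below is illustrative only: it assumes you have already exported your SDR and each report suite’s variable configuration into simple `{slot: label}` mappings. That data shape and the function names `find_sdr_drift` and `find_slot_conflicts` are hypothetical; they are not the export format of reportsuites.com or of any Adobe or Google API, and the loading step is left out.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical data shape: {variable slot: label}, e.g. {"eVar5": "Internal Search Keywords"}.
# Loading these mappings from your SDR spreadsheet or tool export is not shown.
VariableMap = dict[str, str]


def find_sdr_drift(sdr: VariableMap, tool: VariableMap) -> dict[str, tuple[str | None, str | None]]:
    """Return slots whose label differs between the SDR and the tool.

    Each value is (documented label, configured label); None means the slot
    is missing on that side, e.g. a variable a developer enabled "rogue".
    """
    drift = {}
    for slot in sorted(sdr.keys() | tool.keys()):
        documented = sdr.get(slot)
        configured = tool.get(slot)
        if documented != configured:
            drift[slot] = (documented, configured)
    return drift


def find_slot_conflicts(suites: dict[str, VariableMap]) -> dict[str, dict[str, str]]:
    """Return slots that carry different labels in different report suites.

    A suite that leaves a slot unused is simply skipped for that slot, in line
    with hiding unneeded variables instead of repurposing them.
    """
    labels_by_slot: dict[str, dict[str, str]] = defaultdict(dict)
    for suite, variables in suites.items():
        for slot, label in variables.items():
            labels_by_slot[slot][suite] = label
    return {
        slot: per_suite
        for slot, per_suite in sorted(labels_by_slot.items())
        if len(set(per_suite.values())) > 1
    }


if __name__ == "__main__":
    sdr = {"eVar5": "Internal Search Keywords", "event1": "Internal Searches"}
    tool = {"eVar5": "Internal Search Keywords", "eVar7": "Campaign Code"}
    print(find_sdr_drift(sdr, tool))
    # {'eVar7': (None, 'Campaign Code'), 'event1': ('Internal Searches', None)}

    suites = {
        "us_site": {"eVar5": "Internal Search Keywords"},
        "uk_site": {"eVar5": "Product Finding Method"},
        "app": {},
    }
    print(find_slot_conflicts(suites))
    # {'eVar5': {'us_site': 'Internal Search Keywords', 'uk_site': 'Product Finding Method'}}
```

The first check surfaces variables that exist in the tool but not in the SDR (or the reverse); the second flags any slot, such as eVar5, that means different things in different report suites, which is exactly what blocks clean rollup totals.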

In the next post, I am going to dig into how you can identify WHY you are tracking things and use that information to improve your SDR so that it becomes something of real value.